Agenda

09:00-09:30

Registration, badges, kit-bag pick-up

Location: Outside Salsa Lounge

09:30-10:00

Welcome to the workshop - overview & goals

Ritu Arora, TACC

10:00-10:30

Advanced Cyberinfrastructure in Science and Engineering

Vipin Chaudhary, NSF

Abstract: Advanced cyberinfrastructure and the ability to perform large-scale simulations and accumulate massive amounts of data have revolutionized scientific and engineering disciplines. In this talk I will give an overview of the National Strategic Computing Initiative (NSCI) that was launched by Executive Order (EO) 13702 in July 2015 to advance U.S. leadership in high performance computing (HPC). The NSCI is a whole-of-nation effort designed to create a cohesive, multi-agency strategic vision and Federal investment strategy, executed in collaboration with industry and academia, to maximize the benefits of HPC for the United States. I will then discuss NSF’s role in NSCI and present three cross-cutting software programs ranging from extreme scale parallelism to supporting robust, reliable and sustainable software that will support and advance sustained scientific innovation and discovery.

Bio: A veteran of High Performance Computing (HPC), Dr. Chaudhary has been actively participating in the science, business, government, and technology innovation frontiers of HPC for over two decades. His contributions range from heading research laboratories and holding executive management positions to starting new technology ventures. He is currently a Program Director in the Office of Advanced Cyberinfrastructure at the National Science Foundation. He is Empire Innovation Professor of Computer Science and Engineering at the Center for Computational Research at the New York State Center of Excellence in Bioinformatics and Life Sciences at SUNY Buffalo, and the Director of the university’s Data Intensive Computing Initiative. He is also the co-founder of the Center for Computational and Data-Enabled Science and Engineering.

He co-founded Scalable Informatics, a leading provider of pragmatic, high performance software-defined storage and compute solutions to a wide range of markets, from financial and scientific computing to research and big data analytics. From 2010 to 2013, Dr. Chaudhary was the Chief Executive Officer of Computational Research Laboratories (CRL), where he grew the company globally into an HPC cloud and solutions leader before selling it to Tata Consultancy Services. Prior to this, as Senior Director of Advanced Development at Cradle Technologies, Inc., he was responsible for advanced programming tools for multi-processor chips. He was also the Chief Architect at Corio Inc., which had a successful IPO in June 2000.

Dr. Chaudhary’s research interests are in High Performance Computing and Applications to Science, Engineering, Biology, and Medicine; Big Data; Computer Assisted Diagnosis and Interventions; Medical Image Processing; Computer Architecture and Embedded Systems; and Spectrum Management. He has published approximately 200 papers in peer-reviewed journals and conferences and has been the principal or co-principal investigator on over $28 million in research projects from government agencies and industry. Dr. Chaudhary was awarded the prestigious President of India Gold Medal in 1986 for securing the first rank amongst graduating students at the Indian Institute of Technology (IIT). He received the B.Tech. (Hons.) degree in Computer Science and Engineering from the Indian Institute of Technology, Kharagpur, in 1986 and a Ph.D. degree from The University of Texas at Austin in 1992.

10:30-11:00

A User-Defined Code Transformation Approach to Separation of Performance Concerns

Hiroyuki Takizawa, Tohoku University

Abstract: Today, high-performance computing (HPC) application codes are often optimized and specialized for a particular system configuration to exploit the system's potential. One severe problem is that simply modifying an HPC application code often degrades the performance portability, readability, and maintainability of the code. We have therefore been developing a code transformation framework, Xevolver, that lets users easily define their own code transformation rules for individual cases, expressing how each application code should be changed to achieve high performance. In this talk, I will briefly review the Xevolver framework and introduce some case studies to discuss the benefits of the user-defined code transformation approach.
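
To make the idea of a user-defined transformation rule concrete, here is a minimal sketch in Python. It is not Xevolver's interface: it uses Python's standard `ast` module (Python 3.9+ for `ast.unparse`) and a hypothetical rule, `UnrollConstantLoops`, that fully unrolls `for` loops with a small literal trip count. What it illustrates is the separation the abstract describes: the application source stays as written, and the performance-specific change lives in a separate, reusable rule.

```python
import ast
import copy


class UnrollConstantLoops(ast.NodeTransformer):
    """Hypothetical user-defined rule: fully unroll `for v in range(N)` loops
    whose trip count N is a small integer literal."""

    def __init__(self, max_trip=8):
        self.max_trip = max_trip

    def _matches(self, node):
        it = node.iter
        return (
            isinstance(node.target, ast.Name)
            and isinstance(it, ast.Call)
            and isinstance(it.func, ast.Name) and it.func.id == "range"
            and len(it.args) == 1 and not it.keywords
            and isinstance(it.args[0], ast.Constant)
            and type(it.args[0].value) is int
            and 1 <= it.args[0].value <= self.max_trip
            and not node.orelse
            # Conservative: break/continue would change meaning once unrolled.
            and not any(isinstance(n, (ast.Break, ast.Continue))
                        for stmt in node.body for n in ast.walk(stmt))
        )

    def visit_For(self, node):
        self.generic_visit(node)  # apply the rule to inner loops first
        if not self._matches(node):
            return node
        unrolled = []
        for i in range(node.iter.args[0].value):
            # Rebind the loop variable, then emit a fresh copy of the body.
            unrolled.append(ast.Assign(
                targets=[ast.Name(id=node.target.id, ctx=ast.Store())],
                value=ast.Constant(value=i)))
            unrolled.extend(copy.deepcopy(node.body))
        return unrolled  # a list of statements replaces the single loop


def transform(source, rule):
    """Apply a transformation rule to source text; the original text is untouched."""
    tree = rule.visit(ast.parse(source))
    return ast.unparse(ast.fix_missing_locations(tree))


src = """
def dot4(a, b):
    s = 0
    for i in range(4):
        s += a[i] * b[i]
    return s
"""
print(transform(src, UnrollConstantLoops()))
```

Keeping the rule separate means the same readable source can be specialized differently for each target system by swapping rules, rather than maintaining one hand-tuned copy per system.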

Bio: Hiroyuki Takizawa is currently a professor at the Cyberscience Center, Tohoku University. His research interests include performance-aware programming, high-performance computing systems, and their applications. Since 2011, he has been leading a research project, supported by JST CREST, to explore effective ways of assisting the migration of legacy HPC code to future-generation extreme-scale computing systems. He received the B.E. degree in Mechanical Engineering and the M.S. and Ph.D. degrees in Information Sciences from Tohoku University in 1995, 1997, and 1999, respectively.

11:00-11:15

COFFEE BREAK & NETWORKING

11:15-11:45

Technologies for Exascale Computing

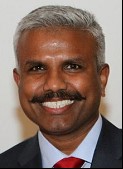

Nash Palaniswamy, Intel

Abstract: Intel is investing in a broad set of technologies to address the challenges of Exascale computing. These technologies target next-generation performance in a configurable system that can achieve exceptional performance in data analytics, traditional high performance computing, and artificial intelligence. Being able to address all of these application domains is of critical importance.

Intel is investing in processors, fabric, memory, and software. Each will be discussed, along with its importance in achieving Exascale.

Bio: Dr. Nash Palaniswamy has been at Intel since October 2005 and focuses on Enterprise and High Performance Computing in the Datacenter Group. He is currently the Senior Director for Worldwide Solutions Enablement and Revenue Management for Enterprise and HPC. In this role, he is responsible for managing all strategic opportunities in Enterprise and HPC and for managing and meeting revenue for the Enterprise and Government segment in Intel’s Datacenter Group. Dr. Palaniswamy leads a team that drives strategic opportunities worldwide (solutions, architecture, products, business frameworks, etc.) in collaboration with Intel’s ecosystem partners.

His prior responsibilities at Intel included leading worldwide business development and operations for Intel® Technical Computing Solutions and Intel® QuickAssist Technology-based accelerators in HPC, and serving as Intel’s World Wide Web Consortium Advisory Committee representative. Before joining Intel through its acquisition of Conformative Systems, an XML accelerator company, he served in several senior executive positions in the industry, including Director of System Architecture at Conformative Systems, CTO/VP of Engineering at MSU Devices (a publicly traded company), and Director of the Java Program Office and Wireless Software Strategy in the Digital Experience Group of Motorola, Inc.

Dr. Palaniswamy holds a B.S. in Electronics and Communications Engineering from Anna University (Chennai, India) and an M.S. and Ph.D. from the University of Cincinnati in Electrical and Computer Engineering.

11:45-12:15

Overcoming Deployment and Configuration Challenges in High Performance Computing via Model-driven Engineering Technologies

Aniruddha Gokhale, Vanderbilt University

Abstract: As systems scale in size and complexity, reasoning about their properties and controlling their behavior requires complex simulations, which often involve multiple interacting co-simulators that must be deployed and configured on high performance computing resources. Increasingly, cloud platforms, which may even be federated, offer cost-effective solutions for realizing such deployments. However, researchers and practitioners alike face a plethora of challenges stemming from the need for rapid provisioning and deprovisioning, ensuring reliability, defining autoscaling strategies for changing workloads, handling resource unavailability, and exploiting modern features such as GPUs, FPGAs, and NUMA architectures, to name a few; they generally lack the expertise to overcome these challenges. Model-driven engineering (MDE) offers significant promise in addressing these challenges by providing users with intuitive abstractions and automating the deployment and configuration tasks. This talk describes our ongoing work in this space and highlights both the MDE and systems solutions that we are investigating.
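
As a rough illustration of the MDE idea, the sketch below treats a small declarative model (co-simulators with their core and GPU needs, plus the available nodes) as the single input and generates a deployment plan from it automatically. The class names, the example co-simulators, and the first-fit-decreasing placement heuristic are all assumptions chosen for illustration; the talk does not specify how its tooling maps models to resources.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    cores: int
    gpus: int = 0


@dataclass
class CoSimulator:
    name: str
    cores: int
    gpus: int = 0


def generate_plan(sims, nodes):
    """Map each co-simulator in the model onto a node (first-fit decreasing).

    Returns {simulator name: node name}. Raises if the model cannot be placed,
    which is where a real tool might instead provision more resources.
    """
    free = {n.name: [n.cores, n.gpus] for n in nodes}
    plan = {}
    # Place the most demanding components first: GPU needs, then core count.
    for sim in sorted(sims, key=lambda s: (s.gpus, s.cores), reverse=True):
        for n in nodes:
            cores, gpus = free[n.name]
            if cores >= sim.cores and gpus >= sim.gpus:
                free[n.name] = [cores - sim.cores, gpus - sim.gpus]
                plan[sim.name] = n.name
                break
        else:
            raise RuntimeError(f"no node can host {sim.name}; provision more resources")
    return plan


# Hypothetical model: two node types and three interacting co-simulators.
nodes = [Node("cpu-node", cores=32), Node("gpu-node", cores=16, gpus=2)]
sims = [CoSimulator("traffic", cores=8),
        CoSimulator("power-grid", cores=12, gpus=1),
        CoSimulator("network", cores=24)]
print(generate_plan(sims, nodes))
```

The user edits only the model; regenerating the plan after a node disappears or a component gains a GPU requirement is what takes the low-level configuration burden off the user.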

Bio: Dr. Aniruddha S. Gokhale is an Associate Professor in the Department of Electrical Engineering and Computer Science and a Senior Research Scientist at the Institute for Software Integrated Systems (ISIS), both at Vanderbilt University, Nashville, TN, USA. His current research focuses on developing novel solutions to emerging challenges in edge-to-cloud computing, real-time stream processing, and publish/subscribe systems as applied to cyber-physical systems, including smart transportation and smart cities. He is also working on using cloud computing technologies for STEM education. Dr. Gokhale obtained his B.E. (Computer Engineering) from the University of Pune, India, in 1989; his M.S. (Computer Science) from Arizona State University in 1992; and his D.Sc. (Computer Science) from Washington University in St. Louis in 1998. Prior to joining Vanderbilt, Dr. Gokhale was a member of technical staff at Lucent Bell Laboratories, NJ. Dr. Gokhale is a Senior Member of both IEEE and ACM, and a member of ASEE. His research has been funded over the years by DARPA, DoD, industry, and NSF, including an NSF CAREER award in 2009.

12:15-12:45

The EX Factor in the Exascale Era: Factors driving changes in HPC

Bharatkumar Sharma, Nvidia

Abstract: GPUs have been used to accelerate HPC algorithms that are based on first-principles theory and on proven statistical models for accurate results in multiple science domains. This talk will provide insights into the HPC domain and how it affects the programs you write, today and in the future, across various domains.

Bio: Bharatkumar Sharma obtained a master's degree in Information Technology from the Indian Institute of Information Technology, Bangalore. He has around 10 years of development and research experience in the domains of Software Architecture and Distributed and Parallel Computing. He is currently working with Nvidia as a Senior Solution Architect, South Asia. He has published papers and journal articles in the fields of Parallel Computing and Software Architecture.

12:45-13:45

LUNCH BREAK & NETWORKING

13:45-14:50

- Neethi Suresh, Lois Thomas and Bipin Kumar, "DNS for large domains: Challenges for computation and storage"

Abstract: Clouds play a very important role in modulating climate by controlling the radiation received at the Earth's surface. In spite of their importance, their dynamics are not well understood because of simulation limitations. The lack of understanding of the hydrodynamics and the micro-physical processes in clouds causes major uncertainties in current atmospheric general circulation models. Direct Numerical Simulation (DNS) is a three-dimensional approach to simulating turbulent flows down to the smallest scales, in which the equations are solved explicitly without any turbulence-model approximation. Running DNS on larger domains is compute-intensive and time-consuming, so prior knowledge of the resources required to run a DNS code is of great advantage. A numerical simulation of the entrainment and mixing process at the cloud interface, used to study droplet dynamics, has been carried out for smaller domains, and the run time for larger domains has been projected using a linear regression formula. The projected time for a larger domain lets researchers estimate the wall-clock time as well as the storage requirement for running DNS on the available computational resources. The simulation is carried out for domains of different sizes, and an optimal number of cores is suggested for each domain based on scaling factors. Further, a 17% gain in computational time is achieved by amending the algorithm so that only sparse operations are performed instead of operations on full dense data. Use of the PnetCDF library has also provided advantages over the conventional I/O format.
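
The projection step can be sketched as follows, under loudly stated assumptions: time per step is modeled as linear in the number of grid points at a fixed core count (the abstract says only that a linear regression formula was used, not which variables it relates), and storage is estimated as one double-precision value per field per grid point. The timings below are synthetic placeholders, not results from the paper, and the function names are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def project_wallclock(points, secs_per_step, target_points, n_steps):
    """Assumed model: time per step grows linearly with grid points (fixed core count)."""
    a, b = fit_line(points, secs_per_step)
    return (a + b * target_points) * n_steps


def snapshot_bytes(target_points, n_fields, bytes_per_value=8):
    """Storage for one output snapshot: n_fields double-precision values per point."""
    return target_points * n_fields * bytes_per_value


# Synthetic placeholder timings (NOT measurements from the paper) for three small domains.
small = [128**3, 192**3, 256**3]
timings = [0.1 + 2e-7 * p for p in small]
print(project_wallclock(small, timings, 1024**3, n_steps=1000), "s projected")
print(snapshot_bytes(1024**3, n_fields=4) / 2**30, "GiB per snapshot")
```

If the solver's cost per step is not linear in grid size (for example, FFT-based solvers scale closer to N log N), the same regression can be run against a transformed predictor instead.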

Presentation Slides:

DNS for large domains: Challenges for computation and storage

Manu Awasthi, "DRAM Organization Aware OS Page Allocation"

Abstract: Applications in the HPC and datacenter domains are beginning to have increasingly large memory footprints, running into multiple tens of gigabytes. Contemporary HPC servers also have multiple NUMA nodes, and the NUMA factor is increasing at an alarming pace as the number of NUMA nodes grows. As a result, the average cost of a main memory access has also been increasing. Furthermore, for a typical HPC application, DRAM access latencies are a function of 1) the memory allocation policies of the OS (across NUMA nodes) and 2) the data layout in DRAM DIMMs (per NUMA node).

A DRAM DIMM has a hierarchical structure where it is divided into ranks, banks, rows and columns. There are multiple such DIMMs on each channel and there could be multiple channels emanating from one memory controller. Furthermore, there could be multiple memory controllers per NUMA node. Data layout across DRAM DIMMs and channels is a function of memory controller specific policies like address mapping schemes, which potentially can differ between multiple controllers on the same node.
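
To see how an address-mapping scheme spreads data over the DRAM hierarchy, the sketch below decodes physical addresses under a hypothetical bit layout (`LAYOUT`, with 64-byte cache lines and 4 KiB pages). Under this assumed layout every cache line of a 4 KiB page lands in the same rank and bank, which is the kind of concentration an organization-unaware OS can produce.

```python
# Hypothetical address-mapping scheme, fields listed from the least-significant bit up.
# Real controllers differ (and often XOR-hash bits); the layout can vary per controller.
LAYOUT = [("offset", 6), ("channel", 1), ("column", 7), ("bank", 3), ("rank", 1), ("row", 16)]
PAGE_SIZE = 4096
LINE_SIZE = 64


def decode(phys_addr, layout=LAYOUT):
    """Split a physical address into DRAM coordinates under `layout`."""
    fields, shift = {}, 0
    for name, width in layout:
        fields[name] = (phys_addr >> shift) & ((1 << width) - 1)
        shift += width
    return fields


def units_touched(pfn, layout=LAYOUT):
    """Set of (channel, rank, bank) triples that the cache lines of one page map to."""
    base = pfn * PAGE_SIZE
    coords = (decode(base + off, layout) for off in range(0, PAGE_SIZE, LINE_SIZE))
    return {(f["channel"], f["rank"], f["bank"]) for f in coords}


print(decode(0x4040))    # channel bit set, bank 1
print(units_touched(0))  # both channels, but a single rank and bank
```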

The OS allocates memory at the granularity of pages. Since the OS has no knowledge of the underlying architecture and organization of per-NUMA-node DRAM, its pages could end up concentrated on a subset of the available channels, DIMMs, ranks, banks, and arrays, putting those units under pressure and reducing performance. To get the most benefit out of existing architectures, the OS must allocate memory (at page granularity) while being cognizant of the internal architecture and organization of per-NUMA-node DRAM.

In this proposal, we describe mechanisms by which the OS, in addition to reducing memory allocation for processes across NUMA nodes, also takes into account the organization of DRAM DIMMs (channels, ranks, banks, rows, columns, etc.) behind every memory controller. This helps the OS make page allocation decisions that maximize the use of available parallelism and increase locality, thereby reducing overall memory access latency and application runtimes.
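
A minimal page-coloring sketch of such a policy follows. It assumes the NUMA node has already been chosen and that the (rank, bank) of a page can be read from fixed bits of its page frame number; `color_of`, the bit positions, and `BankAwareAllocator` are hypothetical. For each process, the allocator prefers the (rank, bank) color from which that process currently holds the fewest pages, so consecutive allocations spread across banks instead of piling into one.

```python
from collections import defaultdict


def color_of(pfn):
    """Hypothetical mapping: bank in pfn bits 2-4, rank in pfn bit 5 (4 KiB pages)."""
    bank = (pfn >> 2) & 0b111
    rank = (pfn >> 5) & 0b1
    return (rank, bank)


class BankAwareAllocator:
    def __init__(self, free_pfns):
        self.free = defaultdict(list)  # color -> free frames
        for pfn in sorted(free_pfns, reverse=True):  # pop() then yields lowest pfn first
            self.free[color_of(pfn)].append(pfn)
        self.usage = defaultdict(lambda: defaultdict(int))  # pid -> color -> pages held

    def alloc(self, pid):
        candidates = [c for c, pfns in self.free.items() if pfns]
        if not candidates:
            raise MemoryError("no free pages on this NUMA node")
        # Prefer the (rank, bank) this process uses least, so its pages spread
        # across banks and its memory accesses can proceed in parallel.
        color = min(candidates, key=lambda c: (self.usage[pid][c], c))
        self.usage[pid][color] += 1
        return self.free[color].pop()


allocator = BankAwareAllocator(range(64))
pages = [allocator.alloc(pid=1) for _ in range(16)]
print(sorted({color_of(p) for p in pages}))  # all 16 (rank, bank) pairs
```

Handing out the lowest free frames in order (frames 0 to 15) would, under the same assumed bit layout, touch only 4 of the 16 (rank, bank) pairs.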

Presentation Slides:

DRAM Organization Aware OS Page Allocation

Gouri Kadam and Shweta Nayak, "Implementation of OpenSource Structural Engineering Application OpenSees on GPU platform"

Abstract: GPU computing is emerging as an alternative to CPUs for throughput-oriented applications because of the large number of cores on a single chip and the resulting speed-up in computation. GPUs provide general-purpose computing through dedicated programming platforms such as CUDA (for NVIDIA cards), which is based on a SIMD architecture that makes it possible to handle a very large number of hardware threads concurrently and can be used for various purposes. This paper describes the customization of OpenSees, a well-known open-source earthquake engineering application, for a hybrid platform using GPU-enabled open-source libraries.

The available GPU-enabled version of OpenSees, provided by Xinzheng Lu and Linlin Xie (Tsinghua University, China), uses CulaS4 and CulaS5, which depend on the CULA library and are currently supported only on the Windows platform. Both of these restrictions limit the usage of OpenSees as an open-source platform. To overcome this, we have modified the existing CuSPSolver to use only the freely available CUSP library. A speed-up is achieved by offloading the analysis component to the GPU architecture.

GPU enablement will help researchers and scientists use this open-source platform for their research in the structural and earthquake engineering domain. Because only minor changes to the input script are required and no significant programming effort is needed to run GPU-enabled OpenSees, these modifications are very user-friendly. A speed-up of around 2.14x was observed on some test examples. Different types of examples were studied to compare the results, and performance was observed to improve as the complexity of the problem increases. Nodal displacement results for one of the examples matched between the CPU and GPU simulations, which validates the methodology used for GPU enablement. These modifications have also been integrated into the mainstream OpenSees code at Berkeley and are now available to the entire structural engineering community. All of the work was carried out with a single CPU and a single GPU; there is considerable scope to extend it to multiple GPUs, to other accelerators, and to OpenCL.
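
A large part of a finite-element analysis is solving a sparse linear system K u = f for the nodal displacements u. As a CPU-only sketch of that kind of computation, the Python below runs the conjugate gradient method (one of the Krylov solvers the CUSP library offers on the GPU; which solver CuSPSolver actually uses is not stated here) on a hypothetical chain of n equal springs fixed at one end, and checks the result against the analytical displacements u_i = i F / k. The comparison mirrors the CPU-versus-GPU displacement check used for validation.

```python
def conjugate_gradient(A, b, tol=1e-10, max_iter=None):
    """Solve A x = b for a symmetric positive-definite matrix A (list of rows)."""
    n = len(b)
    x = [0.0] * n
    r = list(b)   # residual b - A x, with the initial guess x = 0
    p = list(r)   # first search direction
    rs = sum(v * v for v in r)
    for _ in range(max_iter or 10 * n):
        if rs ** 0.5 < tol:
            break
        Ap = [sum(A[i][j] * p[j] for j in range(n)) for i in range(n)]
        alpha = rs / sum(p[i] * Ap[i] for i in range(n))
        x = [x[i] + alpha * p[i] for i in range(n)]
        r = [r[i] - alpha * Ap[i] for i in range(n)]
        rs_new = sum(v * v for v in r)
        p = [r[i] + (rs_new / rs) * p[i] for i in range(n)]
        rs = rs_new
    return x


def spring_chain_stiffness(n, k):
    """Stiffness matrix of n equal springs in series, with node 0 fixed."""
    K = [[0.0] * n for _ in range(n)]
    for i in range(n):
        K[i][i] = 2 * k if i < n - 1 else k  # last free node has only one spring
        if i > 0:
            K[i][i - 1] = K[i - 1][i] = -k
    return K


n, k, F = 10, 2.0, 1.0
K = spring_chain_stiffness(n, k)
f = [0.0] * (n - 1) + [F]                    # load applied at the free end
u = conjugate_gradient(K, f)
exact = [(i + 1) * F / k for i in range(n)]  # analytical reference
print(max(abs(a - b) for a, b in zip(u, exact)))
```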

Presentation Slides:

Implementation of OpenSource Structural Engineering Application OpenSees on GPU platform

Mangala N, Deepika H.V, Prachi Pandey and Shamjith K V, "Adaptive Resource Allocation Technique for Exascale Systems"

Abstract: Future exascale systems are expected to have numerous heterogeneous computing resources in order to achieve the required computational performance within energy consumption guidelines. To harness these resources, hybrid applications that can exploit a combination of CPUs and other accelerators are essential. To optimally exploit the cluster performance offered by a heterogeneous architecture, applications must be scheduled based on the characteristics they exhibit. However, scheduling hybrid parallel applications is challenging, because a single resource allocation algorithm must handle multiple aspects of heterogeneity, application characteristics, and accelerator capabilities while achieving optimal resource utilization and good response time for all jobs. We propose a novel resource allocation technique that makes an in-depth scheduling decision based on multiple criteria: characterization of the hybrid applications, energy conservation, and network topology with resource proximity. Knowledge of prior job executions is used to self-evolve the optimal resource selection mechanism. A unique enhancement has been added to the scheduler to avoid long waiting times by dynamically adapting applications to alternate resources, re-targeting the device at runtime. This technique reduces the waiting time for the required resources and hence improves turnaround time for the user. The resource allocator considers the challenges of the exascale scenario and orchestrates a solution that helps HPC users get their applications executed with less waiting time and better efficiency, while assuring effective resource utilization from the HPC resource administrator's perspective.
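
A minimal sketch of multi-criteria selection with runtime re-targeting is shown below. The weights, the `Resource` fields, and the scoring formula (a weighted sum of normalized runtime from prior executions, estimated energy, and network distance) are assumptions for illustration, not the authors' algorithm. Re-targeting is modeled by letting a job list every device type it can run on, so a busy GPU pool leads to a CPU placement instead of a long wait.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    device: str   # device type, e.g. "gpu" or "cpu"
    busy: bool
    watts: float  # assumed power draw while running the job
    hops: int     # assumed network distance to the job's data / other ranks


def choose_resource(job_devices, resources, runtime_history, weights=(0.6, 0.3, 0.1)):
    """Return the best free resource, or None if the job must wait.

    job_devices: device types the hybrid application can run on (re-targeting
    is allowed among these). runtime_history: device type -> positive runtime (s)
    observed in prior executions of this job. Lower score is better.
    """
    w_time, w_energy, w_hops = weights
    free = [r for r in resources if not r.busy and r.device in job_devices]
    if not free:
        return None
    t = {r.name: runtime_history[r.device] for r in free}
    e = {r.name: r.watts * t[r.name] for r in free}  # energy = power x time
    max_t, max_e = max(t.values()), max(e.values())
    max_h = max(r.hops for r in free) or 1

    def score(r):
        return (w_time * t[r.name] / max_t
                + w_energy * e[r.name] / max_e
                + w_hops * r.hops / max_h)

    return min(free, key=score)


pool = [Resource("gpu0", "gpu", True, 300.0, 1),
        Resource("gpu1", "gpu", False, 300.0, 3),
        Resource("cpu0", "cpu", False, 200.0, 1)]
history = {"gpu": 100.0, "cpu": 400.0}
print(choose_resource(["gpu", "cpu"], pool, history).name)  # gpu1
pool[1].busy = True
print(choose_resource(["gpu", "cpu"], pool, history).name)  # cpu0: re-targeted instead of waiting
```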

Presentation Slides:

Adaptive Resource Allocation Technique for Exascale Systems

Shreya Bokare, Sanjay Pawar and Veena Tyagi, "Network coded Storage I/O subsystem for HPC exascale applications"

Abstract: Tremendous data growth in exascale HPC applications will generate a variety of datasets. This imposes two important challenges on the storage I/O system: (i) efficiently processing large data sets and (ii) improving fault tolerance. Exascale storage systems are expected to provide bandwidth guarantees and to apply coding schemes optimized for the type of data set.

Traditional RAID architectures in HPC storage systems are being replaced with network coding techniques that provide optimal fault tolerance for data. Network codes based on traditional erasure codes (Reed-Solomon) vary, and a number of library implementations exist that differ notably in complexity and in implementation-specific optimizations.
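
To illustrate what a systematic erasure code does, the sketch below uses the simplest one, a single XOR parity chunk (as in RAID 5), rather than Reed-Solomon: the data chunks are stored unchanged, and any one lost chunk is rebuilt by XOR-ing the survivors. Reed-Solomon generalizes this to m parity chunks that tolerate m losses, at the cost of arithmetic over a finite field. The function names are illustrative.

```python
from functools import reduce


def encode_chunks(data: bytes, k: int):
    """Split data into k equal chunks (zero-padded) and append one XOR parity chunk."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    chunks = [padded[i * size:(i + 1) * size] for i in range(k)]
    parity = bytes(reduce(lambda a, b: a ^ b, col) for col in zip(*chunks))
    return chunks + [parity]  # systematic: the data chunks appear as-is


def recover(chunks):
    """Rebuild the single missing chunk (marked None) by XOR-ing the survivors."""
    missing = [i for i, c in enumerate(chunks) if c is None]
    if len(missing) > 1:
        raise ValueError("single-parity code tolerates only one lost chunk")
    if missing:
        survivors = [c for c in chunks if c is not None]
        chunks[missing[0]] = bytes(reduce(lambda a, b: a ^ b, col) for col in zip(*survivors))
    return chunks


def decode_chunks(chunks, k, length):
    """Recover if needed, then concatenate the k data chunks and strip padding."""
    return b"".join(recover(list(chunks))[:k])[:length]


data = b"exascale storage"
stored = encode_chunks(data, k=4)
stored[2] = None  # simulate a failed drive
print(decode_chunks(stored, 4, len(data)))
```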

We propose an I/O subsystem that provides a bandwidth guarantee along with optimized fault tolerance for a variety of HPC/big data datasets. The proposed storage I/O subsystem consists of software-defined storage gateways and a controller working alongside the filesystem MDS. We propose an I/O caching mechanism with a hierarchical storage structure embedded at the storage gateway. The designed caching policy caches smaller files on faster storage drives (such as SSDs), eliminating long access latency for small files. The storage gateways run a systematic erasure (RS) code with preconfigured encoding-decoding algorithms. At each gateway, network coding (erasure coding encode-decode) is performed at multiple tiers with specified parameters. Geometric programming is used to calculate the parameters at each tier based on data demand. The proposed system provides a bandwidth guarantee through the caching mechanism at hierarchical storage layers, and flexible erasure coding provides optimized fault tolerance for a variety of application datasets.
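
The small-file caching policy can be sketched as a size-gated LRU cache on the fast tier. The size threshold, capacity, and `TieredCache` class are hypothetical; the abstract does not give the gateway's actual parameters or replacement policy.

```python
from collections import OrderedDict


class TieredCache:
    """Hypothetical gateway policy: files of at most `small_limit` bytes are kept on
    a fast tier (e.g. SSD) of `fast_capacity` bytes with LRU eviction; everything
    else is served from the slower, erasure-coded tier."""

    def __init__(self, small_limit, fast_capacity):
        self.small_limit = small_limit
        self.fast_capacity = fast_capacity
        self.fast = OrderedDict()  # name -> size, least recently used first
        self.used = 0

    def access(self, name, size):
        """Record an access and return the tier that served it ("fast" or "slow")."""
        if name in self.fast:
            self.fast.move_to_end(name)
            return "fast"
        if size <= self.small_limit:
            # Admit the small file, evicting least-recently-used entries as needed.
            while self.used + size > self.fast_capacity and self.fast:
                _, evicted_size = self.fast.popitem(last=False)
                self.used -= evicted_size
            if self.used + size <= self.fast_capacity:
                self.fast[name] = size
                self.used += size
        return "slow"  # this access itself missed the fast tier


cache = TieredCache(small_limit=1000, fast_capacity=2000)
print(cache.access("a", 800), cache.access("a", 800))  # slow fast
print(cache.access("big", 10**6))                      # slow, never cached
```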

Future work will focus on the design of flexible caching and replacement policies for real-time workloads and an adaptive encode-decode algorithm for highly skewed data demand.

Presentation Slides:

NETWORK CODED STORAGE I/O SUBSYSTEM FOR HPC EXASCALE APPLICATIONS

Manavalan R., "Application level challenges and issues of processing different frequency, polarization and incidence angle Synthetic Aperture Radar data using distributed computing resources"

Abstract: The role of Synthetic Aperture Radar (SAR) technology in various geospatial applications is well established, as SAR can provide critical on-the-field information with centimeter-to-millimeter accuracy. Processing SAR data using distributed computing resources is more than two decades old and is now broadly supported by HPC labs worldwide, for example ESA's G-POD (Grid Processing on Demand), Peppers (A Performance Emphasized Production Environment for Remote Sensing), the DLR distributed SAR data processing environment, and the Center for Earth Observation and Digital Earth (CEODE) of the Chinese Academy of Sciences (CAS). This paper discusses the difficulties faced by geospatial application users who work with multiple sets of voluminous temporal SAR data, and highlights the importance of developing and deploying a general-purpose HPC-based infrastructure that meets the expectations of application users. Specific situations that require simultaneous processing of SAR data of different frequencies, polarizations and incidence angles using distributed HPC resources, together with the related requirements, will be discussed. For example, the talk will highlight the need for simultaneous processing and extraction of different polarization images during SAR raw data processing, as well as for completing the related post-processing tasks and mapping operations. When the same has to be done at a regional scale, mainly to simulate large-scale disaster events with various multi-look factors and different post-processing filtering options, the need to develop and deploy such real-time solutions on an exascale computing environment becomes clear. To date, a large-scale SAR application model that supports these requirements has yet to be developed.
Accordingly, this talk will highlight the importance of prototyping an HPC-based SAR data processing environment that can support the complete application cycle of real-time regional disaster management simulations and related operations. Any such operational setup will require effective coordination between space agencies and distributed HPC labs worldwide.
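Multi-looking, mentioned above as one of the processing options, averages SAR intensity over a window of azimuth x range pixels to reduce speckle at the cost of resolution. The sketch below applies it to several polarization channels concurrently. It is a toy, single-node illustration: the channel names, the 2 x 2 look factors and the thread pool (standing in for the distributed HPC resources the talk refers to) are assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List

Image = List[List[float]]  # intensity image indexed [azimuth][range]


def multilook(img: Image, az_looks: int, rg_looks: int) -> Image:
    """Average non-overlapping az_looks x rg_looks blocks (trailing partial blocks are dropped)."""
    rows = len(img) // az_looks
    cols = len(img[0]) // rg_looks
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            block = [img[r * az_looks + i][c * rg_looks + j]
                     for i in range(az_looks) for j in range(rg_looks)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def multilook_all(channels: Dict[str, Image], az_looks: int, rg_looks: int) -> Dict[str, Image]:
    """Multi-look every polarization channel (e.g. 'HH', 'HV') concurrently."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(multilook, img, az_looks, rg_looks)
                   for name, img in channels.items()}
        return {name: f.result() for name, f in futures.items()}


if __name__ == "__main__":
    hh = [[1.0, 3.0, 5.0, 7.0],
          [1.0, 3.0, 5.0, 7.0]]
    hv = [[0.0, 2.0, 4.0, 6.0],
          [2.0, 4.0, 6.0, 8.0]]
    print(multilook_all({"HH": hh, "HV": hv}, az_looks=2, rg_looks=2))
```

In CPython, threads do not speed up this pure-Python loop because of the global interpreter lock; the pool only shows the structure. A real deployment would give each channel to a separate process, MPI rank or node.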

Presentation Slides:

‘Application level’ challenges and issues of processing different frequency, polarization and incidence angle Synthetic Aperture Radar data using distributed computing resources

Venkatesh Shenoi, Janakiraman S and Sandeep Joshi, "Towards energy efficient numerical weather prediction: Scalable algorithms and approaches"

Abstract: Weather forecasting has a major impact on society. Meteorologists have used numerical weather prediction models for operational forecasts for several decades, ever since Richardson's attempt at numerical weather prediction. Weather codes consume a good portion of the computational power available at many supercomputing centers. This has created a need to improve the computational efficiency of the codes/models so that finer resolution, and hence better forecast accuracy, can be achieved. However, these large-scale computations come at the expense of a huge energy budget, owing to the growing requirement for computational resources as well as the cooling infrastructure needed to maintain them. With the energy budget fixed at 20 MW, the challenge is even greater, and we are compelled to improve the energy efficiency of weather codes. We focus on the spectral transform method as a case study for this talk. This method has been used at NCAR and ECMWF for operational forecasts.

In this talk, we shall discuss the basics of numerical weather prediction and then move to the shallow water model test bed, solved by the spectral transform method. The highlights of the approach with regard to the algorithm, its scalability, and improvements that reduce its computational complexity will be discussed. To conclude, some recent efforts on scaling up the solver for the shallow water equations are discussed, along with a glimpse of the ongoing efforts in the ESCAPE project. This talk is inspired by our project proposal on “Scalable algorithmic approach to spectral transform method”, to be pursued under NSM, India.
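The core idea of the spectral transform method is to compute derivatives in spectral (wavenumber) space, where they become multiplications, and nonlinear products in grid-point space, transforming between the two as needed. Operational models use spherical harmonics on the sphere; the sketch below is a deliberately simplified one-dimensional periodic analogue that uses a naive O(N^2) discrete Fourier transform instead of an FFT library to evaluate the nonlinear advection term u du/dx, which also appears in the shallow water equations. The function names are illustrative.

```python
import cmath
import math
from typing import List


def dft(u: List[float]) -> List[complex]:
    """Forward transform from grid-point space to spectral space (naive O(N^2) DFT)."""
    n = len(u)
    return [sum(u[g] * cmath.exp(-2j * math.pi * m * g / n) for g in range(n))
            for m in range(n)]


def idft(coeffs: List[complex]) -> List[float]:
    """Inverse transform back to grid-point space (real part kept for real-valued fields)."""
    n = len(coeffs)
    return [(sum(coeffs[m] * cmath.exp(2j * math.pi * m * g / n) for m in range(n)) / n).real
            for g in range(n)]


def spectral_derivative(u: List[float], length: float = 2 * math.pi) -> List[float]:
    """d/dx of a periodic field: multiply each Fourier mode by i*k in spectral space."""
    n = len(u)
    coeffs = dft(u)
    for m in range(n):
        # Map index m to its signed wavenumber; zero the Nyquist mode for even n.
        if n % 2 == 0 and m == n // 2:
            k = 0
        else:
            k = m if m < n / 2 else m - n
        coeffs[m] *= 1j * (2 * math.pi / length) * k
    return idft(coeffs)


def advection_term(u: List[float]) -> List[float]:
    """Nonlinear term u * du/dx: derivative in spectral space, product in grid-point space."""
    du = spectral_derivative(u)
    return [ui * dui for ui, dui in zip(u, du)]


if __name__ == "__main__":
    n = 16
    x = [2 * math.pi * g / n for g in range(n)]
    u = [math.sin(3 * xg) for xg in x]
    du = spectral_derivative(u)
    err = max(abs(d - 3 * math.cos(3 * xg)) for d, xg in zip(du, x))
    print(f"max derivative error: {err:.2e}")  # at round-off level for a resolved mode
```

Production spectral codes replace the naive transform with FFTs (and Legendre transforms on the sphere) and handle aliasing of the nonlinear product, for example with the 3/2 rule; both are omitted here for brevity.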

Presentation Slides:

Towards Energy Efficient Numerical Weather Prediction

14:50-15:10

Narendra Karmarkar, VCV Rao, "MPPLAB (E-Teacher)"

Abstract: HPC has huge future scope in scientific applications, large databases, AI/deep learning, and business applications in the financial industry, telecom (particularly 5G), etc. Unfortunately, present systems serve the market with separate products in a rather fragmented manner. The challenge is to create a "Unified Architecture" that unifies the user space, following a "top-down" approach instead of the "bottom-up" approach, which forces applications to fit their code to the peculiarities of particular CPUs, accelerators, system architectures, etc. Today, the potential of HPC and silicon technology is grossly underutilized because of the effort and time spent tailoring parallel code to specific machines, and the incorporation of FPGA-based reconfigurability into general applications is being delayed unnecessarily. If the economic benefits of what is eminently feasible technologically are not delivered to society quickly enough, investment in further technological enhancements slows down. All HPC users will benefit from this in the long run. At the same time, we are focusing on how very complex code can be put together in a shorter time span, and in such a way that the investment in top-level code design is long-lived in the face of anticipated changes in successive generations of chips, interfaces, etc. Given the vast scope, we will present only a broad overview and elaborate on a couple of aspects. Improving programmer productivity requires designing and writing parallel code at multiple levels of abstraction, with more expressive notations and tools for transforming one level to the next. It is also necessary to do away with the artificial boundary between hardware description languages and how far traditional compilers reach starting from high-level languages. This will enable more seamless use of FPGA-based reconfigurability in the unified system architecture. Since the hardware aspects are too complex to cover in full, only one particular aspect, related to GPUs, will be covered.
Initially, we will address the application of optimization algorithms to economic modelling and telecom systems.

Bio: VCV Rao received his Master's degree in Mathematics from Andhra University, India, in 1985 and his Ph.D. in Mathematics from IIT Kanpur in 1993. He has been associated with C-DAC since 1993, working on High Performance Computing projects. He contributed to the design, development and deployment of C-DAC's PARAM series of supercomputers and the GARUDA Grid Computing project, and contributed to parallel computing workshops and to the PARAM series at premier academic institutions. Currently, he is an Associate Director in the High-Performance Computing Technologies (HPC-Tech) Group at C-DAC, Pune.

Bio: Karmarkar received his B.Tech in EE from IIT Bombay in 1978, his M.S. from the California Institute of Technology in 1979, and his Ph.D. in Computer Science from the University of California, Berkeley, in 1983. He is well known for his work on linear programming algorithms, a cornerstone of the field of linear programming. He became a Fellow of Bell Laboratories in 1987. In 2006-2007, he served as scientific advisor to the Chairman of the Tata group, founded CRL, and architected the "EKA" system, which stands for "Embedded Karmarkar Algorithm". Currently, he is a Consultant Chief Architect at C-DAC, Pune. He is also a distinguished visiting professor at several institutes, such as IISc and the IITs.

13:45-14:50

Neethi Suresh, Lois Thomas and Bipin Kumar, "DNS for large domains: Challenges for computation and storage"

Abstract: Clouds play a very important role in modulating climate by controlling the radiation received at the Earth's surface. In spite of their importance, their dynamics are not well understood because of simulation limitations. The lack of understanding of the hydrodynamics and microphysical processes in clouds causes major uncertainties in current atmospheric general circulation models. Direct Numerical Simulation (DNS) is a three-dimensional approach to simulating turbulent flows down to the smallest scales, in which the governing equations are solved explicitly without any turbulence model. Running DNS on bigger domains is compute-intensive and lengthy, so prior knowledge of the resources required to run a DNS code is a great advantage. A numerical simulation of the entrainment and mixing process at the cloud interface, used to study droplet dynamics, has been carried out for smaller domains, and the run time for bigger domains has been projected using a linear regression formula. The projected time for a bigger domain allows researchers to estimate both the wall-clock time and the storage required to run DNS on the available computational resources. The simulation is carried out for domains of different sizes, and an optimized number of cores is suggested for each domain based on scaling factors. Further, a 17% gain in computational time is achieved by amending the algorithm so that only sparse operations are performed in place of operations on the full dense data. Use of the PnetCDF library has also provided advantages over conventional I/O formats.
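The projection step can be illustrated with an ordinary least-squares fit of wall-clock time against problem size, extrapolated to a larger domain. The abstract does not give the regression variables or the measured timings, so using total grid points as the predictor, the function names, and the exactly linear synthetic numbers in the usage example are assumptions, not results from the study.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = a + b*x; returns (a, b)."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two measurements")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def project_runtime(grid_points: List[float], seconds: List[float], target_points: float) -> float:
    """Extrapolate the fitted line to a larger domain size."""
    a, b = fit_line(grid_points, seconds)
    return a + b * target_points


if __name__ == "__main__":
    # Synthetic, exactly linear timings for illustration only (not data from the talk).
    sizes = [1e6, 2e6, 4e6]
    times = [10.0, 20.0, 40.0]
    print(f"projected time for 8e6 points: {project_runtime(sizes, times, 8e6):.1f} s")
```

Extrapolating well beyond the measured sizes assumes the linear relationship continues to hold at the same core count; communication or memory effects at larger scales can break that assumption, which is why the fit is best refreshed per core count.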

Presentation Slides:

DNS for large domains: Challenges for computation and storage

Manu Awasthi, "DRAM Organization Aware OS Page Allocation"

Abstract: Applications in the HPC and datacenter domains are beginning to have increasingly large memory footprints, running into multiple tens of gigabytes. Contemporary HPC servers also have multiple NUMA nodes, and the NUMA factor is increasing at an alarming pace as the number of NUMA nodes grows. As a result, the average cost of a main memory access has also been increasing. Furthermore, for a typical HPC application, DRAM access latencies are a function of 1) the OS memory allocation policies (across NUMA nodes) and 2) the data layout in DRAM DIMMs (per NUMA node).

A DRAM DIMM has a hierarchical structure: it is divided into ranks, banks, rows and columns. There are multiple such DIMMs on each channel, and there can be multiple channels emanating from one memory controller. Furthermore, there can be multiple memory controllers per NUMA node. Data layout across DRAM DIMMs and channels is a function of memory-controller-specific policies such as address mapping schemes, which can differ between controllers on the same node.

The OS allocates memory at the granularity of pages. Since the OS has no knowledge of the underlying architecture and organization of per-NUMA-node DRAM, its pages can end up concentrated on a subset of the available channels, DIMMs, ranks, banks and arrays, putting them under pressure and reducing performance. To get the most benefit out of existing architectures, the OS must allocate memory (at page granularity) while being cognizant of the internal architecture and organization of per-NUMA-node DRAM.

We propose mechanisms in which the OS, in addition to reducing cross-NUMA-node memory allocation for processes, also takes into account the organization of DRAM DIMMs (channels, ranks, banks, rows, columns, etc.) for every memory controller. This will help the OS make page allocation decisions that maximize the use of available parallelism and increase locality, reducing overall memory access latency and hence application runtimes.
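To illustrate what organization-aware page allocation could look like, the sketch below decodes a physical address into channel, rank, bank, row and column fields using a made-up bit layout, then picks free page frames so that allocations are spread across (channel, rank, bank) groups instead of piling onto a few. Real address mappings are controller-specific and often hash several address bits together, so the `FIELDS` layout, the 4 KiB page size and the greedy least-loaded policy are assumptions for illustration, not the mechanism proposed in the talk.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

PAGE_SHIFT = 12  # 4 KiB pages (assumption)

# Hypothetical address mapping, listed from the least significant bit upward.
# offset + column = 12 bits, so each 4 KiB page lands in exactly one channel/rank/bank.
FIELDS: List[Tuple[str, int]] = [
    ("offset", 6),   # byte within a 64-byte cache line
    ("column", 6),
    ("channel", 1),
    ("bank", 3),
    ("rank", 1),
    ("row", 15),
]


def decode(addr: int) -> Dict[str, int]:
    """Split a physical address into DRAM coordinates according to FIELDS."""
    fields, shift = {}, 0
    for name, bits in FIELDS:
        fields[name] = (addr >> shift) & ((1 << bits) - 1)
        shift += bits
    return fields


def bank_key(frame: int) -> Tuple[int, int, int]:
    """(channel, rank, bank) that a whole page frame maps to under this layout."""
    d = decode(frame << PAGE_SHIFT)
    return d["channel"], d["rank"], d["bank"]


def allocate(free_frames: Iterable[int], n_pages: int) -> List[int]:
    """Greedy allocator: repeatedly take a free frame from the least-loaded (channel, rank, bank)."""
    free = list(free_frames)
    load: Counter = Counter()
    chosen: List[int] = []
    for _ in range(n_pages):
        if not free:
            raise MemoryError("out of free frames")
        best = min(free, key=lambda f: (load[bank_key(f)], f))
        free.remove(best)
        load[bank_key(best)] += 1
        chosen.append(best)
    return chosen


if __name__ == "__main__":
    print(decode(0x1000))  # bit 12 set: channel 1, all other fields 0
    # Frames 0 and 32 share (channel, rank, bank) under this layout; first-fit would
    # pick both, while the greedy allocator spreads the first three pages out.
    print(allocate([0, 32, 64, 96, 1, 2], 3))  # [0, 1, 2]
```

Spreading pages across channels and banks lets independent accesses proceed in parallel and reduces row-buffer conflicts between pages that would otherwise share a bank.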

Presentation Slides:

DRAM Organization Aware OS Page Allocation

Gouri Kadam and Shweta Nayak, "Implementation of OpenSource Structural Engineering Application OpenSees on GPU platform"

Abstract: GPU computing is emerging as an alternative to CPUs for throughput-oriented applications because of the number of cores embedded on the same chip and the resulting speed-up in computing. GPUs provide general-purpose computing through dedicated libraries such as CUDA (for NVIDIA cards), which is based on a SIMD architecture that makes it possible to handle a very large number of hardware threads concurrently and can be used for various purposes. This paper explains the customization of the well-known open-source earthquake engineering application OpenSees for a hybrid platform using GPU-enabled open-source libraries.

The available GPU-enabled version of OpenSees provided by Xinzheng Lu and Linlin Xie (Tsinghua University, China) uses CulaS4 and CulaS5, which rely on the Cula library and are currently supported on the Windows platform. Both of these limit the use of OpenSees as an open-source platform. To overcome this, we have modified the existing CuSPSolver to use only the freely available CUSP library. A speed-up is achieved by offloading the analysis component to the GPU architecture.

GPU enablement will help researchers and scientists use this open-source platform for research in the structural and earthquake engineering domain. Because only minor changes are required in the input script, and no significant programming effort is needed to run it with GPU-enabled OpenSees, these modifications are very user friendly. A speed-up of around 2.14x was observed when tested with some examples. Different types of examples were studied to compare the results, and performance was observed to increase with the complexity of the problem. Nodal displacement results of one of the examples from CPU and GPU simulations were compared and found to match, which validates the methodology used for GPU enablement. These modifications were also integrated into the mainstream OpenSees code at Berkeley and are now available to the entire structural engineering community. All the studies were carried out with a single CPU and a single GPU. There is a lot of scope to extend this work to multiple GPUs, to other accelerators, and to OpenCL.
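The analysis component that the CUSP-based solver moves to the GPU essentially solves a sparse linear system of equations. As a CPU-only illustration of the kind of kernel involved, here is a conjugate gradient solve on a matrix stored in CSR format; CUSP provides Krylov solvers such as conjugate gradient and supports CSR storage. This plain Python sketch assumes a symmetric positive-definite matrix; it is not OpenSees's CuSPSolver code, and the function names and tolerance are hypothetical.

```python
import math
from typing import List


def csr_matvec(data: List[float], indices: List[int], indptr: List[int],
               x: List[float]) -> List[float]:
    """y = A @ x for A stored in CSR form (nonzero values, column indices, row pointers)."""
    return [sum(data[p] * x[indices[p]] for p in range(indptr[i], indptr[i + 1]))
            for i in range(len(indptr) - 1)]


def dot(a: List[float], b: List[float]) -> float:
    return sum(ai * bi for ai, bi in zip(a, b))


def conjugate_gradient(data: List[float], indices: List[int], indptr: List[int],
                       b: List[float], tol: float = 1e-10, max_iter: int = 1000) -> List[float]:
    """Solve A x = b for a symmetric positive-definite A in CSR form, starting from x = 0."""
    x = [0.0] * len(b)
    r = list(b)            # residual b - A x for the initial guess x = 0
    d = list(r)            # search direction
    rs_old = dot(r, r)
    for _ in range(max_iter):
        if math.sqrt(rs_old) < tol:
            break
        ad = csr_matvec(data, indices, indptr, d)
        alpha = rs_old / dot(d, ad)            # step length along d
        x = [xi + alpha * di for xi, di in zip(x, d)]
        r = [ri - alpha * adi for ri, adi in zip(r, ad)]
        rs_new = dot(r, r)
        beta = rs_new / rs_old
        d = [ri + beta * di for ri, di in zip(r, d)]  # next A-conjugate direction
        rs_old = rs_new
    return x


if __name__ == "__main__":
    # Stiffness-like tridiagonal matrix [[2,-1,0],[-1,2,-1],[0,-1,2]] in CSR form.
    data = [2.0, -1.0, -1.0, 2.0, -1.0, -1.0, 2.0]
    indices = [0, 1, 0, 1, 2, 1, 2]
    indptr = [0, 2, 5, 7]
    print(conjugate_gradient(data, indices, indptr, [1.0, 0.0, 1.0]))  # approximately [1, 1, 1]
```

The sparse matrix-vector product dominates each iteration, and its independent per-row work is what maps well onto many GPU threads; the gain generally grows with problem size, consistent with the trend reported above.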

Presentation Slides:

Implementation of OpenSource Structural Engineering Application OpenSees on GPU platform

Mangala N, Deepika H.V, Prachi Pandey and Shamjith K V, "Adaptive Resource Allocation Technique for Exascale Systems"

Abstract: Future exascale systems are expected to have numerous heterogeneous computing resources in order to achieve computational performance within energy consumption guidelines. To harness these resources, it is essential to have hybrid applications that can exploit a combination of CPUs and other accelerators for execution. To optimally exploit the cluster performance offered by a heterogeneous architecture, applications must be scheduled based on their exhibited characteristics. However, scheduling hybrid parallel applications is challenging, as a single resource allocation algorithm must handle multiple aspects of heterogeneity, application characteristics and accelerator capabilities while achieving optimal resource utilization and good response time for all jobs. We propose a novel resource allocation technique that makes an in-depth, logical scheduling decision based on multiple criteria: characterization of the hybrid applications, energy conservation, and network topology with resource proximity for allocation. Knowledge of prior job executions is used to self-evolve the optimal resource selection mechanism. A unique enhancement has been added to the scheduler to avoid long waiting times by dynamically adapting applications to alternate resources, re-targeting the device at runtime. This technique helps reduce the waiting time for obtaining the required resource, and hence improves turnaround time for the user. The resource allocator considers the challenges of the exascale scenario and orchestrates a solution that helps HPC users get their applications executed with less waiting time and better efficiency, while assuring effective resource utilization from the HPC resource administrator's perspective.
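A toy version of multi-criteria allocation with runtime re-targeting might look as follows: each idle resource is scored on the job's affinity for its device type (as learned from prior runs), its power draw and its network proximity, and if no resource of the preferred device type is idle, the job falls back to the best resource of an alternate type it can be retargeted to. The criteria weights, the `Resource` and `Job` fields and the linear scoring formula are hypothetical; the abstract does not specify how the criteria are combined.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Resource:
    name: str
    device: str   # e.g. "gpu" or "cpu"
    idle: bool
    watts: float  # power draw under load
    hops: int     # network distance from the job's other resources


@dataclass
class Job:
    name: str
    preferred: str                                             # device type the job targets first
    retarget_to: List[str] = field(default_factory=list)       # alternates it can be retargeted to
    affinity: Dict[str, float] = field(default_factory=dict)   # speed-up seen in prior runs


# Hypothetical weights for combining the criteria.
W_AFFINITY, W_ENERGY, W_PROXIMITY = 1.0, 0.002, 0.1


def score(job: Job, r: Resource) -> float:
    """Higher is better: reward learned affinity, penalize power draw and network distance."""
    return (W_AFFINITY * job.affinity.get(r.device, 1.0)
            - W_ENERGY * r.watts
            - W_PROXIMITY * r.hops)


def allocate(job: Job, resources: List[Resource]) -> Optional[Resource]:
    """Pick the best idle resource of the preferred type, else retarget to an alternate type."""
    for device in [job.preferred] + job.retarget_to:
        candidates = [r for r in resources if r.idle and r.device == device]
        if candidates:
            return max(candidates, key=lambda r: score(job, r))
    return None  # nothing suitable is idle: the job waits in the queue


if __name__ == "__main__":
    pool = [Resource("gpu0", "gpu", False, 250.0, 1),
            Resource("cpu0", "cpu", True, 150.0, 2),
            Resource("cpu1", "cpu", True, 150.0, 0)]
    job = Job("cfd", preferred="gpu", retarget_to=["cpu"], affinity={"gpu": 4.0, "cpu": 1.0})
    print(allocate(job, pool).name)  # gpu0 is busy, so the job is retargeted to cpu1
```

Updating `affinity` after each completed run is one simple way the "knowledge of prior job execution" could feed back into future decisions.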

Presentation Slides:

Adaptive Resource Allocation Technique for Exascale Systems

15:10-15:30

COFFEE BREAK & NETWORKING

15:30-17:00

Hands-on session: Using the Interactive Parallelization Tool (IPT) to Generate OpenMP, MPI, and CUDA Programs

Abstract: This talk will provide an overview of a high-productivity parallel programming tool known as the Interactive Parallelization Tool (IPT), which assists in efficiently parallelizing existing C/C++ applications using any of the following parallel programming models: Message Passing Interface (MPI), OpenMP, and CUDA. To assist in parallelization, IPT uses its knowledge base of parallel programming expertise (encapsulated as design templates and rules) and relies on the specifications (i.e., what to parallelize and where) provided by users. IPT can be used for self-paced learning of different parallel programming paradigms. It helps users understand the differences in the structure and performance of the parallel code generated for different specifications from the same serial application. A hands-on session on parallel programming using IPT will also be conducted.

Presentation Slides:

Using the Interactive Parallelization Tool to Generate Parallel Programs (OpenMP, MPI, and CUDA)

Ritu Arora, TACC

17:00-17:30

Parallel programming contest (MPI/OpenMP/CUDA) - parallelize programs with or without IPT - C/C++ as the base language - prizes for top contestants - the winner's award is an NVIDIA Tesla K40c GPU

17:30-18:00

"Global Preparedness for Exascale Computing", Moderator: Vipin Chaudhary. Panelists: Bharat Kumar, Hiroyuki Takizawa, Nash Palinaswamy, VCV Rao

18:00-19:00

NETWORKING RECEPTION AND PRIZE WINNER ANNOUNCED
